2025

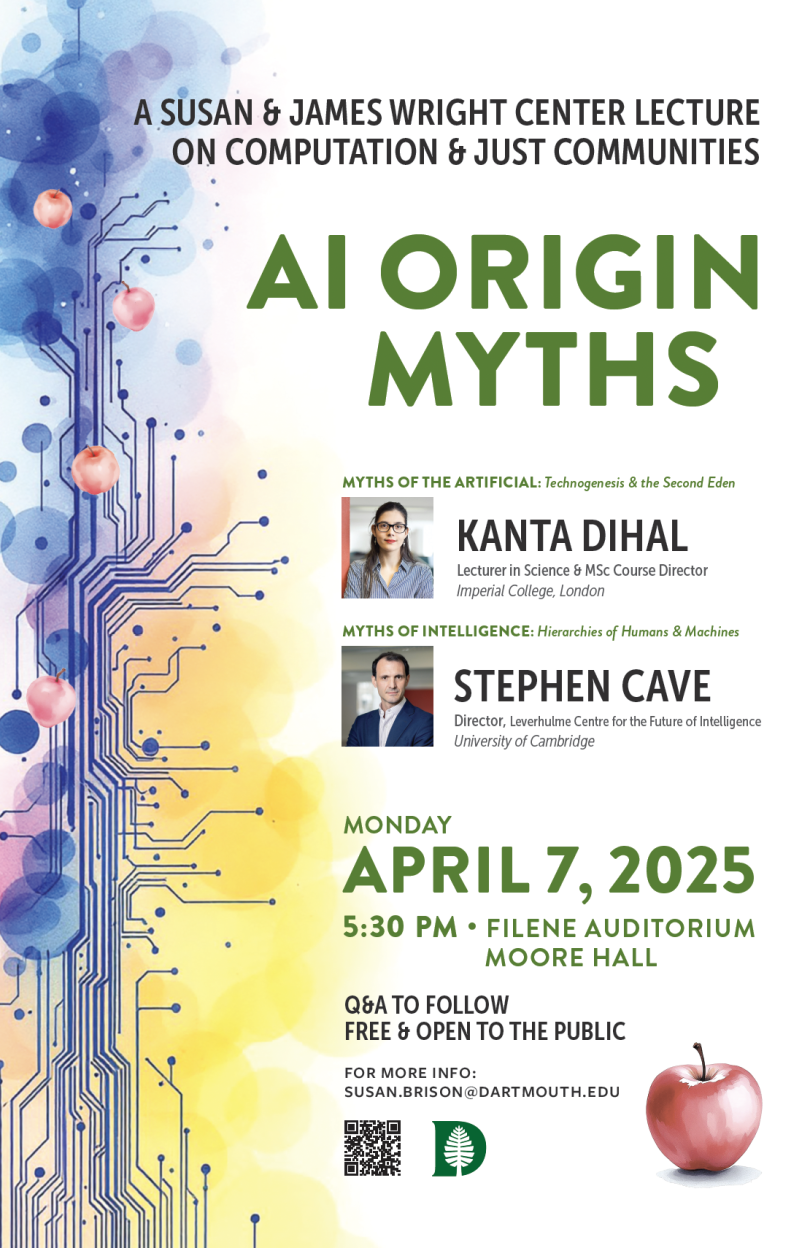

A Susan and James Wright Center Lecture on Computation and Just Communities

Kanta Dihal & Stephen Cave

AI Origin Myths

April 7, 2025, 5:30pm in person at the Filene Auditorium, Moore Hall, Dartmouth College.

Or join us virtually on Dartmouth’s YouTube channel

Free and open to the public, with Q&A to follow

Abstract and Bios below

Session abstract:

AI is surrounded by a rich mythology that long predates the invention of the technology itself. Stephen Cave and Kanta Dihal will explore two origin myths of AI that continue to shape our hopes and fears for thinking machines today. The first pertains to the artificial, and the dream of using technology to create a paradise on earth. The second concerns intelligence as a singular faculty of ultimate importance.

Talk 1:

Myths of the artificial: technogenesis and the second Eden by Kanta Dihal

Abstract

From the Enlightenment onwards, the Christian West increasingly regarded technology as the means to build a new Eden, an artificial paradise. Technological progress came to be seen not only as a way to improve lives, but as a defining characteristic of humanity, a concept called ‘technogenesis’. This in turn inspired the notion that a lack of technological progress equates to inferiority, a key justification of colonial exploitation. Today, AI is imagined as the key to the new Eden. This talk asks whether it will replicate an ideology of subjugation.

Talk 2:

Myths of intelligence: hierarchies of humans and machines by Stephen Cave

The AI revolution is predicated on the primacy of intelligence: the idea that it is a singular faculty of critical importance to the trajectory of civilization. But, like the idea of technological progress mentioned above, this idea has its origins in the logic of colonialism, and was instrumental in creating racialised, gendered and class-based hierarchies of the human. This short talk explores how this dark history of intelligence shapes the hopes and fears we have for AI.

Bios

Kanta Dihal is Lecturer in Science Communication at Imperial College London, where she is Course Director of the MSc in Science Communication. She is currently also a Visiting Research Fellow at Google. Her research focuses on science narratives, particularly science fiction, and how they shape public perceptions and scientific development. She is co-editor of the books AI Narratives (2020) and Imagining AI (2023). She holds a DPhil from the University of Oxford, with a thesis on the communication of quantum physics.

Stephen Cave is Director of the Leverhulme Centre for the Future of Intelligence at the University of Cambridge. His research focuses on the philosophy and ethics of technology, particularly AI, robotics and life-extension. He is the author of Immortality (Crown, 2012), a New Scientist book of the year, and Should You Choose To Live Forever: A Debate (with John Martin Fischer, Routledge, 2023); and co-editor of AI Narratives (OUP, 2020), Feminist AI (OUP, 2023) and Imagining AI (OUP, 2023).

2024

Claire Benn: Deep Fakes, Pornography, and Consent

Monday, August 5, 3:30pm | Haldeman 41

Political deepfakes have prompted outcry about the diminishing trustworthiness of visual depictions, and the epistemic and political threat this poses. Yet this new technique is being used overwhelmingly to create pornography, raising the question of what, if anything, is wrong with the creation of deepfake pornography. Traditional objections focusing on the sexual abuse of those depicted fail to apply to deepfakes. Other objections—that the use and consumption of pornography harms the viewer or other (non-depicted) individuals—fail to explain the objection that a depicted person might have to the creation of deepfake pornography that utilizes images of them. My argument offers such an explanation. It begins by noting that the creation of sexual images requires an act of consent, separate from any consent needed for the acts depicted. Once we have separated these out, we can see that a demand for consent can arise when a sexual image is of us, even when no sexual activity was actually engaged in, as in the case of deepfake pornography. I then demonstrate that there are two ways in which an image can be ‘of us’, both of which can exist in the case of deepfakes. Thus, I argue: if a person, their likeness, or their photograph is used to create pornography, their consent is required. Whenever the person depicted does not consent (or in the case of children, can’t consent), that person is wronged by the creation of deepfake pornography and has a claim against its production.

Arvind Narayanan: How to Think about AI and Existential Risk

Monday, July 22, 3:30pm | Haldeman 41

Catastrophic and existential risks from AI must be taken seriously. But the current existential risk discourse is dominated by speculation. In this talk, I’ll dissect a series of fallacies that have led to alarmist conclusions, including a misapplication of probability, a mischaracterization of human intelligence, and a conflation of AI models with AI systems. I will argue that we already have the tools to address risks calmly and collectively and provide a set of recommendations for how to do so.

This talk is based on a chapter of the forthcoming book AI Snake Oil by Arvind Narayanan and Sayash Kapoor.

Renée Jorgensen: Fairness and Bias in Predictive Policing

Monday, July 15, 3:30pm | Haldeman 41

Over the last decade, many police departments have adopted (and some have abandoned) a suite of algorithmic tools designed to help law enforcement proactively prevent crime. This talk evaluates whether location-based tools (e.g., PredPol/SoundThinking) can be used in a way that is adequately fair. I argue that because they concentrate the risks posed by police activity on specific geographic areas, whether we should use these tools depends not only on whether they can effectively reduce the observed crime rate, but importantly also on whether they can promise greater security for the residents of the most at-risk neighborhoods.

Patricia J. Williams: Race, Human Bodies & the Spirit of the Law

Thursday, June 27, 7:00pm | Norwich Bookstore

About Patricia J. Williams

Patricia J. Williams is the James L. Dohr Professor of Law Emerita at Columbia Law School and the former “Diary of a Mad Law Professor” columnist for The Nation. She is a MacArthur fellow and the author of six books, including The Alchemy of Race and Rights. She is currently a University Distinguished Professor of Law and Humanities at Northeastern University in Boston.

Ruth Chang: Does AI Design Rest on a Mistake?

Thursday, April 4, 2024, 4:30pm | Haldeman Hall 41

Two notorious problems plague the development of AI: ‘alignment’, the problem of matching machine values with our own, and ‘control’, the problem of preventing machines from becoming our overlords or, indeed, from dispensing with us altogether. Hundreds of millions of dollars have been thrown at these problems, but, it is fair to say, technologists have made little progress in solving them so far. Might philosophers help? In this talk, I propose a conceptual framework for thinking about technological design that has its roots in the philosophical study of values and normativity. This alternative framework puts humans in the loop right where they belong, namely, in ‘hard cases’. This framework may go some way toward solving both the alignment and control problems.

About Ruth Chang

Ruth Chang is Professor of Jurisprudence at the University of Oxford and a member of the Dartmouth class of 1985. Her expertise concerns philosophical questions relating to the nature of value, value conflict, decision making, rationality, love, and human agency. She has shared her research in public venues including radio, television, and newspaper outlets such as National Public Radio, the BBC, National Geographic, and The New York Times. The institutions for which she has lectured or consulted include Google, the CIA, the World Bank, the US Navy, the Bhutan Center for Happiness Studies, and Big Pharma. Her TED talk on hard choices has over nine million views. She is a member of the American Academy of Arts and Sciences.

2023

Mary Anne Franks: Selling Out Free Speech

October 23, 5 p.m. | Filene Auditorium and livestreamed

This reductionist and reactionary interpretation of free speech is not confined to the United States, but is rapidly taking hold around the world in part through the tremendous influence of the Internet and related technologies. No industry has benefited more from the sublimation of civil libertarianism into economic libertarianism than the tech industry, which sells the promise of free speech to billions of people around the world in order to surveil, exploit, and manipulate them for profit. The tech industry has accelerated and incentivized life-destroying harassment, irreparable violations of privacy, deadly health misinformation, pernicious conspiracy theories, and terrorist propaganda in the name of free speech.

About Mary Anne Franks

Mary Anne Franks is the Eugene L. and Barbara A. Bernard Professor in Intellectual Property, Technology, and Civil Rights Law at the George Washington University Law School. She is an internationally recognized expert on the intersection of civil rights, free speech, and technology. Her other areas of expertise include family law, criminal law, criminal procedure, First Amendment law, and Second Amendment law.

Franks is also the president and legislative and tech policy director of the Cyber Civil Rights Initiative, a nonprofit organization dedicated to combating online abuse and discrimination. In 2013, she drafted the first model criminal statute on nonconsensual pornography (sometimes referred to as “revenge porn”), which has served as the template for multiple state laws and for pending federal legislation on the issue. She served as the reporter for the Uniform Law Commission’s 2018 Uniform Civil Remedies for the Unauthorized Disclosure of Intimate Images Act and frequently advises state and federal legislators on various forms of technology-facilitated abuse. Franks also advises several major technology platforms on privacy, free expression, and safety issues. She has been an Affiliate Fellow of the Yale Law School Information Society Project since 2019.

Dr. Ruha Benjamin: Utopia, Dystopia, or… Ustopia?…

September 20, 5 p.m. | Oopik Auditorium

In automated decision systems across healthcare, policing, education, and more, technologies have the potential to deepen discrimination while appearing neutral and even benevolent when compared to the harmful practices of a previous era. In this talk, Ruha Benjamin takes us into the world of biased bots, altruistic algorithms, and their many entanglements, and provides conceptual tools to decode tech predictions with historical and sociological insight. When it comes to AI, Ruha shifts our focus from the dystopian and utopian narratives we are sold to a sober reckoning with the way these tools are already a part of our lives. Whereas dystopias are the stuff of nightmares, and utopias the stuff of dreams… ustopias are what we create together when we are wide awake.

About Dr. Ruha Benjamin

Ruha Benjamin is the Alexander Stewart 1886 Professor of African American Studies at Princeton University, founding director of the Ida B. Wells Just Data Lab, and author of the award-winning book Race After Technology: Abolitionist Tools for the New Jim Code, among many other publications. Her work investigates the social dimensions of science, medicine, and technology with a focus on the relationship between innovation and inequity, health and justice, knowledge and power. She is the recipient of numerous awards and honors, including the Marguerite Casey Foundation Freedom Scholar Award and the President’s Award for Distinguished Teaching at Princeton. Her most recent book, Viral Justice: How We Grow the World We Want, winner of the 2023 Stowe Prize, was born out of the twin plagues of COVID-19 and police violence and offers a practical and principled approach to transforming our communities and helping us build a more just and joyful world.

Workshops

The Personal and the Computational: Faculty Workshop

October 24-25, 2023 | Wright Center/Neukom Institute

In the fall of 2023, the Wright Center also hosted a two-day international workshop titled “The Personal and the Computational,” organized by Dr. Jacopo Domenicucci, a Neukom Postdoctoral Fellow, and Dr. Susan J. Brison. This event brought together seventeen rising philosophers from across the U.S. and from countries such as France, Germany, Italy, the U.K. (including Scotland), New Zealand, and Canada, to discuss cutting-edge research on computation and just societies.

Workshop sessions covered a range of topics central to understanding the intersection of technology and human agency, including “Digital Personalization and Human Agency,” “Algorithmic Amplification and the Right to Reach,” and “Value Alignment and Trust in AI Tools.” Dr. Domenicucci also presented his own work, “Codes and Agency,” contributing to the discussion of how digital environments shape human autonomy.

The workshop fostered international collaborations, with invited discussants such as Marion Boulicault (University of Edinburgh), Shannon Brick (Georgetown University), Barrett Emerick (St. Mary’s College of Maryland), Melina Mandelbaum (University of Cambridge), Moya Kathryn Mapps (Stanford University), and Kathryn Norlock (Trent University). These discussions not only introduced the Wright Center to a broader academic audience but also laid the groundwork for future interdisciplinary research on the ethical implications of digital technology.

Wright Center for Computation and Just Communities Workshop:

“The Personal and the Computational”

Dartmouth College, October 24 & 25, 2023

All workshop sessions are in the Wright Center/Neukom Institute, 252 Haldeman

Tuesday, October 24th

8:15-9:15am — Breakfast in 252 Haldeman

9:15-9:30am — Introduction by Susan J. Brison and Jacopo Domenicucci

Human agency

9:30-10:00am — Maria Brinker (University of Massachusetts, Boston), “Digital personalisation and human agency” / Chair: Susan Brison

10:00-10:25am — Discussion

5-minute break

10:30-11:00am — Elizabeth Edenberg (Baruch College, CUNY), “Securing Rights in the Digital Sphere” / Chair: Kate Norlock

11:00-11:25am — Discussion

11:25-11:40am — Coffee break in 252 Haldeman

11:40am-12:10pm — Jacopo Domenicucci (Dartmouth College), “Codes and Agency” / Chair: Sonu Bedi (Dartmouth College)

12:10-12:35pm — Discussion

12:35-2:00pm — Lunch in 252 Haldeman

Speech

2:00-2:30pm — Etienne Brown (San José State University), “Algorithmic Amplification and the Right to Reach” / Chair: Jacopo Domenicucci

2:30-2:55pm — Discussion

5-minute break

3:00-3:30pm — Mihaela Popa-Wyatt (University of Manchester), “Online Hate: Is Hate an Infectious Disease? Is Social Media a Promoter?” / Chair: Barrett Emerick

3:30-3:55pm — Discussion

3:55-4:10pm — Coffee break in 252 Haldeman

4:10-4:40pm — Lucy McDonald (King’s College London), “Context Collapse Online” / Chair: Marion Boulicault

4:40-5:05pm — Discussion

Free time

7:00pm — Dinner in Ford-Sayer/Brewster, downstairs at the Hanover Inn

Wednesday, October 25th

8:30-9:30am — Breakfast in 252 Haldeman

Between ethics and epistemology

9:30-10:00am — Marianna Bergamaschi Ganapini (Union College), “Value Alignment and Trust in AI Tools” / Chair: Shannon Brick

10:00-10:25am — Discussion

5-minute break

10:30-11:00am — Jeff Lockhart (University of Chicago), “Machines making up people: Machine Learning and the (re)production of human kinds” / Chair: Melina Mandelbaum

11:00-11:25am — Discussion

11:25-11:40am — Coffee break in 252 Haldeman

11:40am-12:10pm — Hanna Kiri Gunn (University of California, Merced), “Why There Can Be No Politically Neutral Social Epistemology of the Internet” / Chair: Moya Mapps

12:10-12:35pm — Discussion

12:35-2:00pm — Lunch and wrap-up session in 252 Haldeman

Invited discussants:

Marion Boulicault (University of Edinburgh)

Shannon Brick (Georgetown University)

Barrett Emerick (St. Mary’s College of Maryland)

Melina Mandelbaum (University of Cambridge)

Moya Kathryn Mapps (Stanford University)

Kathryn Norlock (Trent University)

Organizers:

Susan J. Brison (Director, Susan and James Wright Center for the Study of Computation and Just Communities, Dartmouth)

Jacopo Domenicucci (Neukom Fellow, Dartmouth)